1 min read

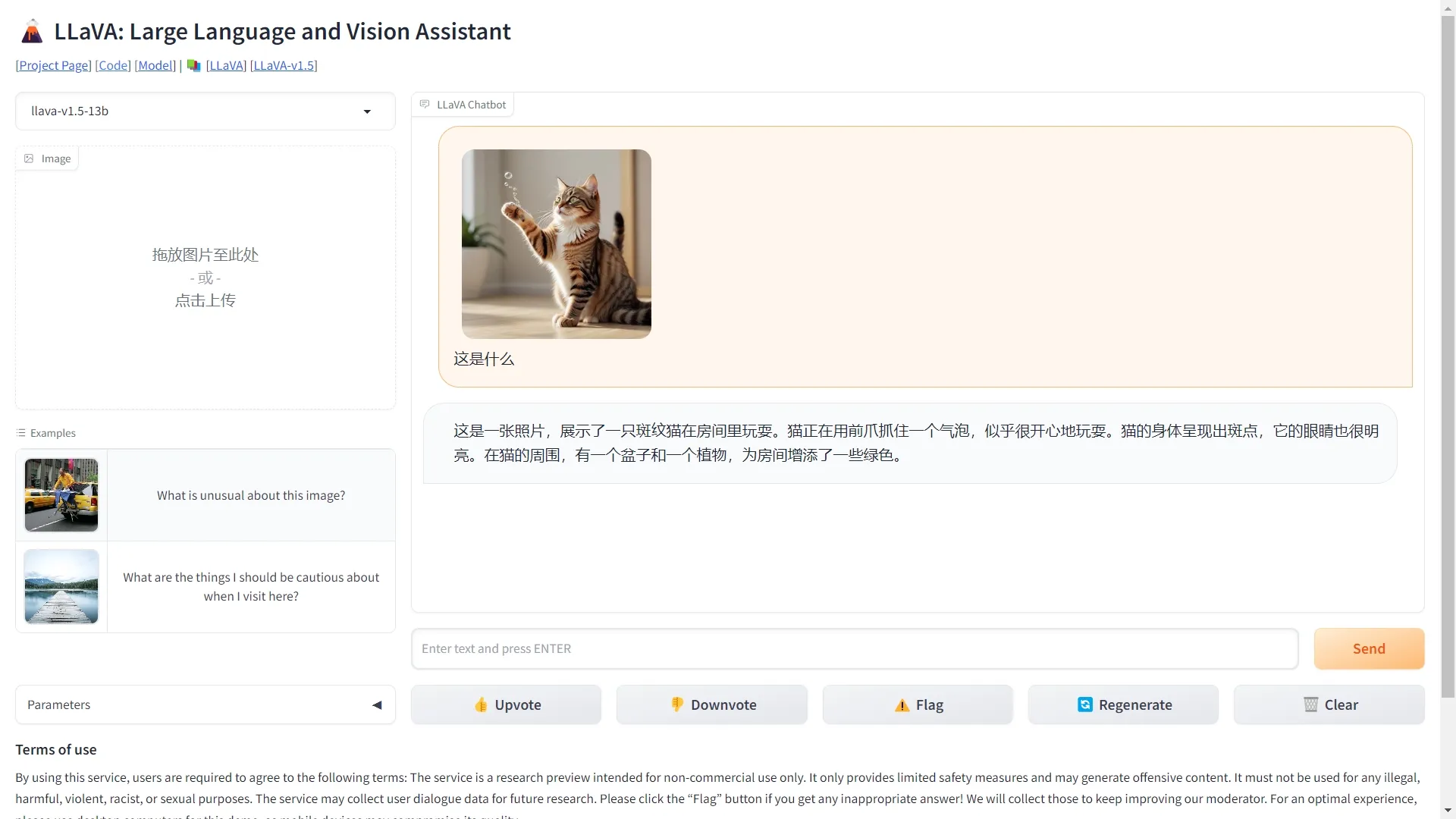

AI Image LLaVA (Large Language and Vision Assistant) is a large multi-modal model jointly released by researchers from the University of Wisconsin-Madison, Microsoft Research, and Columbia University. This model exhibits something approaching multimodal GPT-4 Graphics and text understanding ability: Obtained a relative score of 85.1% relative to GPT-4. When fine-tuned on Science QA, LLaVA and GPT-4 The synergy achieved a new SoTA with 92.53% accuracy.